Did you know? Modern neural networks can now match or outperform humans on specific tasks such as image recognition and game strategy, a development that seemed far off not long ago. Neural networks have become the driving force behind today’s revolution in artificial intelligence, enabling major advances in deep learning and machine learning. Dive in as we break down the core concepts, real-world impacts, and future horizons of neural networks, whether you’re curious, just getting started, or ready to master the next wave in AI.

What You’ll Learn:

- The fundamentals and types of neural networks

- Their role in deep learning and machine learning

- Real-world examples and upcoming innovations

Neural Networks in Modern AI: A Statistical Leap Forward

Neural networks have created a seismic shift in how artificial intelligence systems understand and interact with the world. Unlike classic decision trees or rule-based algorithms, modern neural nets are capable of learning intricate and subtle data relationships hidden in massive amounts of training data. This capability propels advancements in everything from voice assistants to self-driving cars.

For example, in computer vision, deep neural network models can recognize faces with higher precision than older algorithms by leveraging multiple hidden layers to detect subtle features. In natural language applications, neural network models can not only translate speech but also interpret intent, thanks to advances in recurrent and transformer-based architectures. Large financial firms use deep learning for fraud detection, finding deceptive patterns that would elude manual analysis. This leap in problem-solving ability marks a new paradigm in machine learning.

What You Need to Know About Neural Networks

- Discover the fundamentals of neural networks and how they underpin deep learning and machine learning.

- Explore real-world applications where neural networks outperform traditional algorithms.

- Understand the transformative role of neural networks in computer vision, natural language processing, and artificial intelligence.

Defining Neural Networks: Key Concepts and Terminology

What is a Neural Network? The Basic Building Blocks

- Definition and structure of neural networks

- Artificial neurons and the architecture of deep neural networks

- Differences between neural net and traditional algorithms

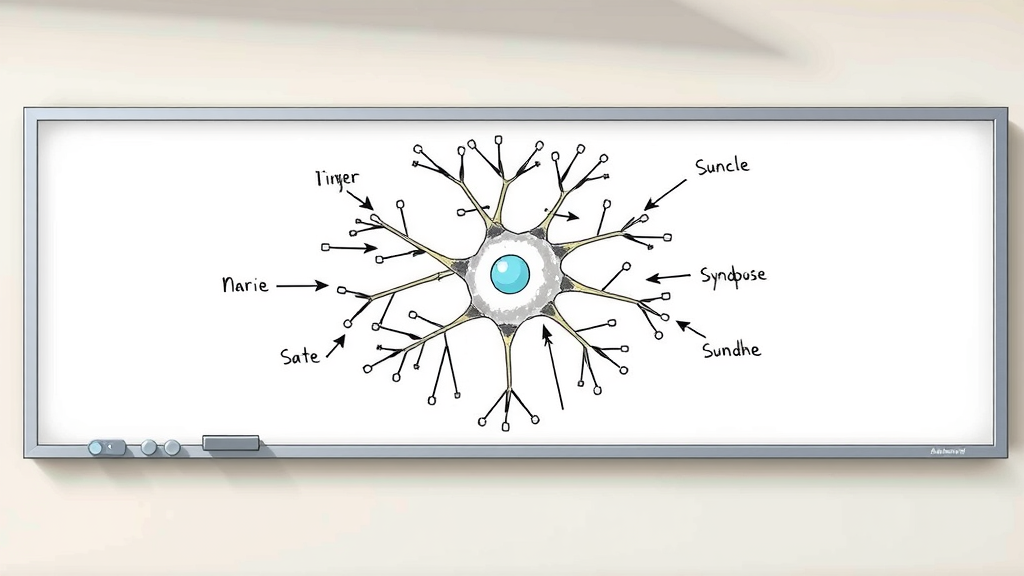

A neural network is a computational model inspired by how the human brain works. At its core, it’s composed of interconnected units called artificial neurons, organized in layers that pass data through weighted connections. The basic structure involves an input layer, one or more hidden layers, and an output layer. Each artificial neuron processes signals and passes on results, much like nerve cells in your brain.
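
To make the layered structure concrete, here is a minimal sketch of a forward pass in plain Python. The weights, biases, layer sizes, and the choice of a sigmoid activation are all made up for illustration; a real network would learn its weights from data.

```python
import math

def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of inputs plus bias, then activation."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(total)

def layer(inputs, weight_rows, biases):
    """A layer is a list of neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

def forward(inputs, network):
    """Pass data through each layer in turn, input to output."""
    activations = inputs
    for weight_rows, biases in network:
        activations = layer(activations, weight_rows, biases)
    return activations

# Hypothetical weights: 2 inputs -> 3 hidden neurons -> 1 output neuron.
network = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),  # hidden layer
    ([[0.7, -0.5, 0.2]], [0.05]),                                 # output layer
]

output = forward([1.0, 2.0], network)
print(output)  # a single value between 0 and 1
```

Each tuple in `network` holds one layer’s weights and biases, so adding a hidden layer is just a matter of adding another tuple.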

Unlike traditional algorithms that follow predetermined rules, neural networks learn patterns directly from the data using learning algorithms. During training, information moves from the input layer through the hidden layers to the output, the result is compared with the expected answer, and the network adjusts its internal weights to reduce the error. This enables deep neural networks to adapt to new challenges like voice recognition, object classification, and even generating new content.

From Neural Net to Neural Networks: Historical Breakthroughs

- Key milestones in neural network development

- Influential scientific breakthroughs shaping deep learning

- Evolution of supervised learning and its integration within neural networks

The history of neural networks began in the 1940s with the first mathematical model of an artificial neuron (McCulloch and Pitts, 1943), followed in the late 1950s by Frank Rosenblatt’s perceptron, one of the earliest trainable neural models. For decades, progress was slow due to limited computational power and a lack of large training data. Major breakthroughs came in the 1980s, when backpropagation was popularized as a practical way to train networks with several layers. This paved the way for multi-layer architectures, which we now call deep neural networks.

By the 2010s, the convergence of big data, affordable GPUs, and improved learning algorithms propelled neural networks into the mainstream. Landmark events, like a convolutional neural network (AlexNet) winning the ImageNet competition in 2012 and AlphaGo defeating world champion Lee Sedol at Go in 2016, showed how far they had come. Ongoing breakthroughs in supervised learning, reinforcement learning, and transformer architectures continue to expand what neural networks can achieve.

How Neural Networks Drive Deep Learning and Machine Learning

Deep Neural Networks and Complex Pattern Recognition

- Features of deep neural networks and deep learning methods

- Advantages of layers within deep learning neural networks

- Examples of how neural networks excel at complex data analysis

Deep neural networks are distinguished by their use of multiple hidden layers that allow them to extract increasingly abstract features from input data. In contrast to shallow networks, deeper models can spot nuanced relationships in complex datasets, like fine distinctions in handwriting or the mood of a sentence in natural language processing. Each hidden layer captures new combinations of information, which often improves the model’s accuracy.

For example, in image recognition, the earliest layers of the network might focus on detecting edges, while later layers combine those into complex patterns like eyes or wheels. This layered approach, characteristic of deep learning algorithms, has delivered state-of-the-art performance in areas such as speech recognition, facial detection, and AI content generation.

Machine Learning vs Neural Networks: What Sets Them Apart?

- Relationship between machine learning and neural networks

- The role of supervised learning, reinforcement learning, and unsupervised learning

- When to use neural networks over traditional machine learning techniques

Machine learning refers to a broader field of computer science where algorithms learn from data rather than following explicitly programmed instructions. Neural networks are a subset within machine learning, specializing in modeling complex, non-linear relationships with multiple hidden layers. While decision trees or “shallow” learners often work best for structured, tabular data, neural networks thrive in unstructured domains: images, text, sound, and video.

Supervised learning dominates many practical uses of artificial neural networks, such as labeling objects in photos. Reinforcement learning excels at tasks where AI needs to optimize decisions over time, such as in robotics or gaming. Unsupervised learning, meanwhile, helps uncover hidden groupings within unlabeled data. When data complexity and scale increase, deep learning with neural networks is often the best approach.
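
A classic illustration of why hidden layers matter for non-linear relationships is XOR (“one or the other, but not both”), which no single threshold neuron can compute. The sketch below uses hand-picked weights and a simple step activation, both chosen for illustration rather than learned from data.

```python
def step(x):
    """Threshold activation: fire (1) when the weighted sum is positive."""
    return 1 if x > 0 else 0

def xor_network(a, b):
    """Two hidden neurons plus one output neuron, with hand-picked weights."""
    h_or = step(a + b - 0.5)          # fires if at least one input is 1
    h_and = step(a + b - 1.5)         # fires only if both inputs are 1
    return step(h_or - h_and - 0.5)   # "OR but not AND" is XOR

results = {(a, b): xor_network(a, b) for a in (0, 1) for b in (0, 1)}
print(results)  # {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}
```

The hidden layer turns the inputs into intermediate features (OR and AND) that the output neuron can then separate with a single threshold.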

Core Types of Neural Networks: Architectures and Applications

Convolutional Neural Networks in Computer Vision

- How convolutional neural networks process images

- Key use cases: image recognition, facial detection, automated driving

- The science behind convolutional layers

Convolutional neural networks (CNNs) are tailored for processing visual data. These networks use specialized layers called convolutional layers that scan small windows of an image, searching for spatial relationships—edges, curves, and textures. This structure makes CNNs the gold standard for computer vision tasks: they can rapidly identify objects, faces, and even interpret street signs for autonomous vehicles.
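
The “scanning small windows” idea can be sketched in a few lines of plain Python. The image and the edge-detecting kernel below are made up for illustration; in a real CNN the kernel values are learned during training.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and return
    the weighted sum at each position. Like most deep learning libraries,
    this does not flip the kernel (strictly speaking, cross-correlation)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output

# A 4x4 image: dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked kernel that responds to a dark-to-bright vertical edge.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The output is zero wherever the window covers a flat region and peaks in the middle column, exactly where the edge sits.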

In practice, CNNs have revolutionized image recognition and automated video analysis, enabling high accuracy in systems such as photo tagging, facial identification, and vehicle perception. Self-driving cars, for example, combine multiple CNNs to read road signs, detect pedestrians, and anticipate traffic, supporting real-time driving decisions.

Recurrent Neural Networks for Natural Language and Sequence Data

- Basics of recurrent neural networks

- Natural language processing with neural networks

- Applications: speech recognition, translation, predictive text

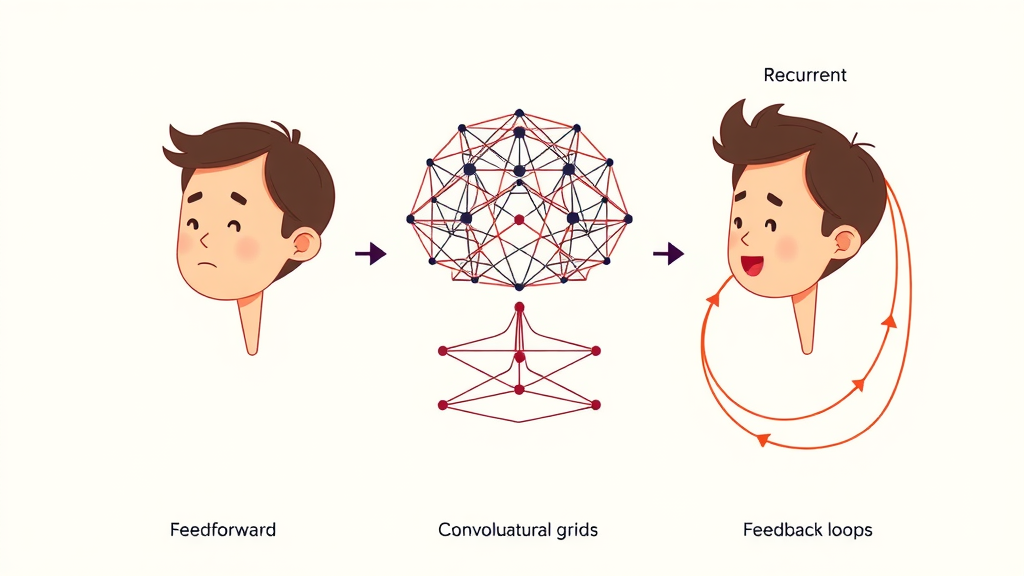

Recurrent neural networks (RNNs) are specially designed to interpret data with a sequential structure, such as words in a sentence or frames in a video. Unlike feedforward networks, RNNs maintain an internal memory of previous inputs, allowing them to “remember” context. This memory is crucial in natural language processing, where meaning often depends on word order and earlier statements.

RNNs have long been used in speech recognition systems (turning spoken words into text), language translation software (earlier versions of Google Translate, for example), and predictive text in smartphones. Their ability to track the flow of language paved the way for advanced AI chatbots like ChatGPT, which rely on transformer architectures that handle context with attention mechanisms instead of recurrence.
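
Here is a minimal sketch of that internal memory, using a single recurrent unit with a tanh activation. The weights `w_x` and `w_h` are hypothetical values picked for illustration; real RNNs use vectors and matrices and learn these values.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One step of a single-unit recurrent cell: the new hidden state
    mixes the current input with the previous hidden state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Feed a sequence through the cell, carrying the hidden state forward."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# Both sequences end with the same input (0.0), but the final hidden
# state differs because the cell still carries traces of earlier inputs.
after_ones = run_rnn([1.0, 1.0, 0.0])
after_zeros = run_rnn([0.0, 0.0, 0.0])
print(after_ones[-1], after_zeros[-1])
```

The first number is clearly positive while the second is zero, which is the “context” the network keeps from earlier in the sequence.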

Specialized Neural Nets: Artificial Neurons, Deep Neural Networks, and Beyond

- Role of artificial neuron and artificial neurons in learning

- What makes deep neural networks so effective

- Cutting-edge neural net architectures: transformers, generative adversarial networks

The power of deep neural networks comes from the sheer number of their artificial neurons and the way those neurons are connected. Each neuron makes a simple decision; together, vast networks can learn highly complex representations. Recent innovations have produced specialized networks, like transformers (which power ChatGPT) and generative adversarial networks (GANs) for image synthesis. These architectures unlock advanced tasks, from summarizing lengthy documents to creating photorealistic artwork.

The effectiveness of these specialized neural nets is rooted in their ability to self-organize—discovering features and relationships that might be invisible to even experienced human experts. As researchers continue to evolve these models, the potential for automation, creativity, and scientific discovery grows in tandem.

Neural Networks in Action: Real-World Uses and Industry Impact

How Deep Learning and Machine Learning Are Changing Industries

- Neural networks in healthcare (diagnostics, personalized medicine)

- Impacts in finance (fraud detection, trading algorithms)

- Partnering neural networks with computer vision in autonomous vehicles

- Language processing applications in customer service and content generation

From CT-scan analysis in hospitals to high-frequency trading in stock markets, artificial neural networks are redefining entire industries. In healthcare, deep learning models review patient scans with accuracy that, in some studies, rivals that of medical experts, offering faster, more consistent diagnoses and supporting personalized treatment plans tailored to genetic and lifestyle data.

In finance, neural nets analyze millions of transactions in real time, flagging fraudulent behavior that would slip past traditional rules-based systems. Autonomous vehicles blend computer vision with language processing and deep learning to interpret road signs, pedestrian behaviors, and driver voice commands for safer travel. Even customer service bots draw on language models to answer questions, process requests, and automatically route calls. The result? More intelligent, adaptive, and human-like systems than ever before.

The Science Behind Neural Network Training

Supervised Learning and Backpropagation Explained

- Overview of supervised learning in neural networks

- Role of backpropagation and gradient descent

- Interpretation of neural network weights and biases

Most neural networks learn through a process called supervised learning. Here, the network is shown many examples of data, each paired with the correct “answer.” The system makes predictions, then calculates how far off its guesses are using a loss function. Through backpropagation and gradient descent, the network tweaks its internal weights and biases to minimize errors, improving accuracy with each training cycle.
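
The training loop described above can be sketched with the simplest possible “network”: a single linear neuron fitted by gradient descent on a mean squared error loss. The data, learning rate, and epoch count are made up for illustration.

```python
def train_linear_neuron(data, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        for x, y in data:
            error = (w * x + b) - y        # prediction minus correct answer
            grad_w += 2 * error * x / n    # d(loss)/dw
            grad_b += 2 * error / n        # d(loss)/db
        w -= learning_rate * grad_w        # step against the gradient
        b -= learning_rate * grad_b
    return w, b

def mean_squared_error(data, w, b):
    """The loss function: average squared gap between guess and answer."""
    return sum(((w * x + b) - y) ** 2 for x, y in data) / len(data)

# Labeled examples generated from the made-up rule y = 2x + 1.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

w, b = train_linear_neuron(data)
print(round(w, 2), round(b, 2))        # 2.0 1.0
print(mean_squared_error(data, w, b))  # very close to zero
```

Starting from `w = 0` and `b = 0`, the loop recovers the rule that generated the data. Deep networks follow the same recipe, with backpropagation supplying the gradient for every weight in every layer.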

Think of weights as the “importance” of each input or connection. As training data flows from input to output layers, the network constantly recalibrates these weights, shaping its structure to become an expert in the given task—be it recognizing handwritten digits or translating between languages.
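
To see how backpropagation decides how much each weight and bias should change, here is a sketch for a deliberately tiny network: one input, one tanh hidden neuron, and one linear output. The starting parameters and the training example are hypothetical, and the finite-difference check is included only to confirm that the chain-rule gradients are right.

```python
import math

def forward(x, params):
    """Tiny network: 1 input -> 1 tanh hidden neuron -> 1 linear output."""
    w1, b1, w2, b2 = params
    h = math.tanh(w1 * x + b1)
    y_hat = w2 * h + b2
    return h, y_hat

def loss(x, y, params):
    """Squared error for a single training example."""
    _, y_hat = forward(x, params)
    return (y_hat - y) ** 2

def backprop(x, y, params):
    """Apply the chain rule from the loss back to each parameter."""
    w1, b1, w2, b2 = params
    h, y_hat = forward(x, params)
    d_yhat = 2 * (y_hat - y)     # dL/dy_hat
    d_w2 = d_yhat * h            # output weight
    d_b2 = d_yhat                # output bias
    d_h = d_yhat * w2            # error passed back to the hidden neuron
    d_z = d_h * (1 - h * h)      # through tanh: derivative is 1 - tanh(z)^2
    d_w1 = d_z * x               # hidden weight
    d_b1 = d_z                   # hidden bias
    return [d_w1, d_b1, d_w2, d_b2]

def numerical_gradient(x, y, params, eps=1e-6):
    """Finite-difference estimate, used only to check backprop."""
    grads = []
    for i in range(len(params)):
        up = list(params)
        up[i] += eps
        down = list(params)
        down[i] -= eps
        grads.append((loss(x, y, up) - loss(x, y, down)) / (2 * eps))
    return grads

params = [0.3, -0.1, 0.7, 0.2]  # hypothetical w1, b1, w2, b2
analytic = backprop(1.5, 1.0, params)
numeric = numerical_gradient(1.5, 1.0, params)
print(analytic)
print(numeric)
```

The two printed lists should agree to several decimal places. A large gradient means a small change in that weight has a big effect on the error, which is one way to read a weight’s “importance” during training.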

Table: Comparing Neural Network Training Methods

| Training Method | Neural Network Type | Common Use Cases | Strengths | Weaknesses |
|---|---|---|---|---|
| Supervised Learning | Feedforward, Convolutional, Recurrent | Image recognition, speech recognition, translation | High accuracy when labeled data is plentiful | Requires labeled data, can overfit |
| Unsupervised Learning | Autoencoders, Generative Adversarial Networks | Clustering, anomaly detection | Finds hidden patterns, no labels required | Harder to evaluate, may find meaningless patterns |
| Reinforcement Learning | Deep Q-Networks, policy networks | Robotics, game AI, optimization | Good for sequential tasks, adapts over time | Resource intensive, slow to converge |

People Also Ask: Demystifying Neural Networks

What is the neural network?

- A neural network is a computational model inspired by the human brain, composed of interconnected artificial neurons that process and analyze data to recognize patterns and solve specific tasks.

Is every AI a neural network?

- No, while neural networks are a core technology in modern AI, not every AI system uses a neural network. AI also includes rule-based systems and algorithms outside deep learning.

Is ChatGPT a neural network?

- Yes. ChatGPT is built on advanced deep neural networks, specifically transformer neural net architectures, which power its natural language processing abilities.

What are the three types of neural networks?

- Feedforward Neural Networks: The most basic type, using unidirectional connections.

- Convolutional Neural Networks: Specialized for image and vision tasks.

- Recurrent Neural Networks: Designed for sequential or time-dependent data, such as natural language.

Challenges, Innovations, and the Future of Neural Networks

Current Challenges in Deep Learning and Machine Learning

- Overfitting and data requirements

- Need for explainable AI in neural networks

- Computational costs and environmental impact

Despite stunning successes, deep learning still faces major challenges. Overfitting—when a neural net “memorizes” data rather than learning general rules—remains a persistent risk, especially with limited training samples. High accuracy often demands huge datasets and intensive computation, raising concerns about energy consumption and accessibility.
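
Overfitting usually shows up as a gap between training and validation performance: training loss keeps falling while loss on held-out data starts to rise. The sketch below uses made-up loss curves and early stopping, one common mitigation that this article does not otherwise cover, to pick the epoch at which to stop. The function name and the `patience` parameter are illustrative.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the best epoch, stopping once validation loss
    has failed to improve for `patience` epochs in a row."""
    best_epoch = 0
    best_loss = float("inf")
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss = loss
            best_epoch = epoch
            waited = 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch

# Made-up curves: training loss keeps falling, validation loss turns upward.
train_losses = [0.90, 0.60, 0.40, 0.25, 0.15, 0.08, 0.04]
val_losses = [0.95, 0.70, 0.55, 0.50, 0.53, 0.58, 0.66]

best = early_stopping_epoch(val_losses)
print(best)  # 3
```

After epoch 3 the network is still improving on the data it has seen but getting worse on data it has not, which is the “memorizing” behavior described above.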

The rise of complex deep neural networks also increases the need for explainable AI: users and regulators want transparent systems that clarify how decisions are made. As neural networks power more aspects of daily life, ethical and environmental scrutiny will grow, pushing innovation in both technology and oversight.

Breakthroughs on the Horizon: Neural Networks and AI Evolution

- Cutting-edge research in deep neural networks

- Potential of quantum neural nets and hybrid AI

- The role of neural networks in future machine learning advancements

Tomorrow’s breakthroughs will likely stem from integrating neural networks with advances like quantum computing, new kinds of artificial neurons, and approaches that blend symbolic reasoning with deep learning. Newer architectures, such as transformer variants and quantum neural nets, promise greater efficiency, adaptability, and creativity. Researchers are also developing hybrid models that combine neural networks with reasoning, aiming at next-generation AI that can handle more abstract problems and learn from less data.

As innovation surges, neural networks will redefine what’s possible in robotics, scientific discovery, and personalized technologies—reshaping society’s relationship with artificial intelligence.

“Neural networks have fundamentally changed our approach to artificial intelligence, unleashing innovation across every industry.” – Leading AI Researcher

Frequently Asked Questions about Neural Networks

How do neural networks differ from the human brain?

- While inspired by the human brain, neural networks use math and code to model learning, lacking biological features like emotions or organic memory structures.

What skills are necessary to work with neural networks?

- Key skills include programming (Python), an understanding of linear algebra and statistics, and knowledge of data science and machine learning concepts.

Can neural networks learn without labeled data?

- Yes. Approaches like unsupervised learning and self-supervised learning let neural networks find structure in unlabeled data, although with less direct guidance.

Do neural networks have ethical risks?

- Yes. Neural networks, like all AI, must be carefully designed to avoid bias, privacy violations, and unintended consequences, making ethics a crucial area of research.

Highlight Reel: Core Takeaways on Neural Networks and Deep Learning

- Neural networks form the backbone of modern deep learning and machine learning systems.

- Convolutional and recurrent neural networks address specialized tasks in AI.

- Ongoing innovation promises new frontiers for neural network technology.

Take the Next Step: Learn More About Neural Networks

- Explore courses, research papers, and open-source tools to deepen your knowledge of neural networks. Embark on your own AI journey with the latest neural net developments.

Ready to shape the future of AI? Start learning, coding, and experimenting—neural networks are yours to unlock.
